System Overview

Hardware Information

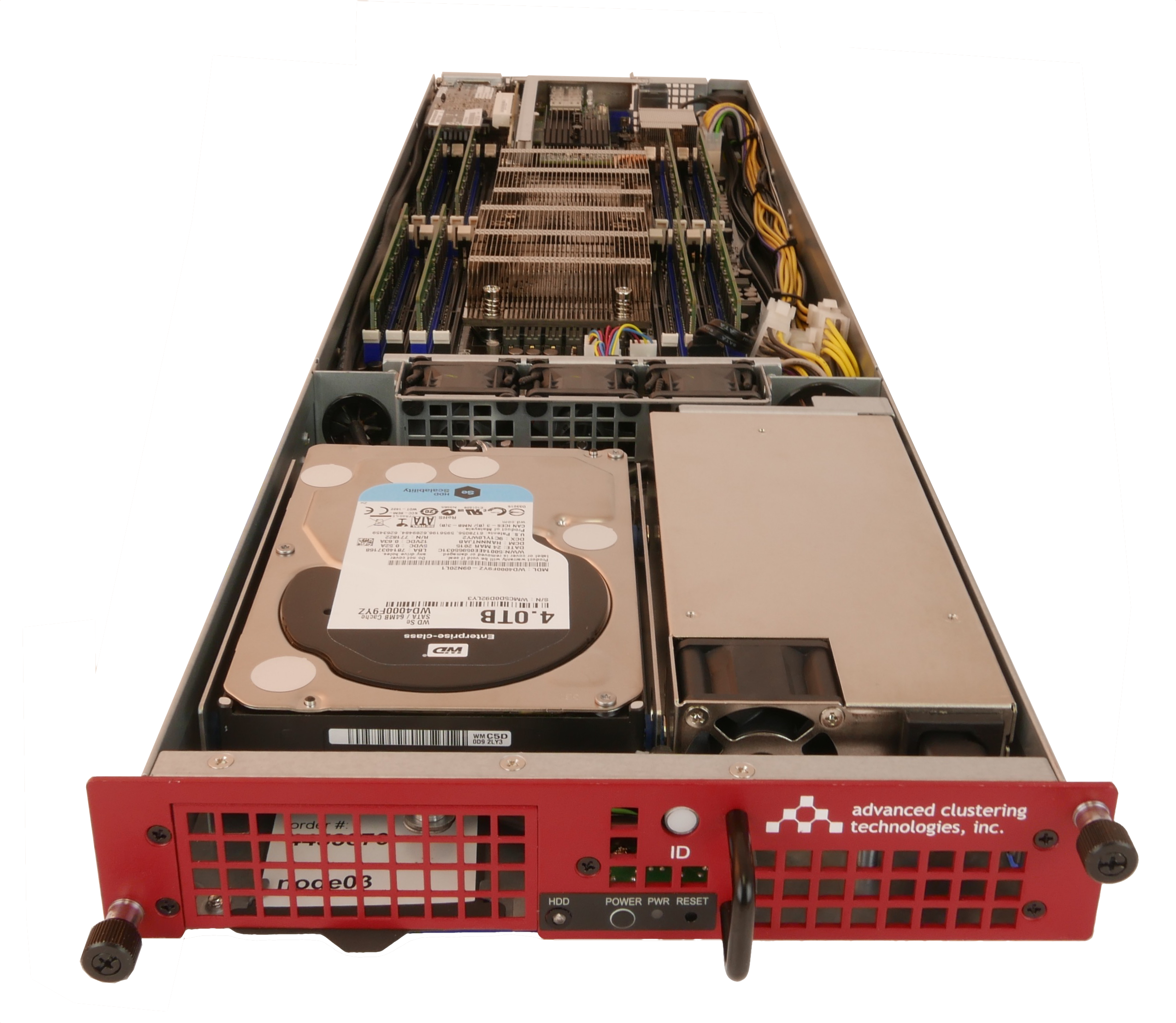

The ACTnowHPC cluster is built with the latest hardware to ensure that your compute jobs run quickly and efficiently. Advanced Clustering knows how to build and configure best-of-breed systems. After all, we have been building, installing, and deploying HPC clusters for our customers for more than 15 years.

Unlike other cloud services, every system that runs your jobs is bare-metal hardware. There is no virtualization layer consuming resources, and no extra software or services taking compute cycles away from your HPC work.

High Performance Networks

The ACTnowHPC system uses multiple networks:

| Network | Features | Purpose |
|---|---|---|
| FDR InfiniBand | 56 Gb/s with sub-1 µs latency | Inter-node MPI (message passing) communication and high-speed storage access on compute nodes |
| 40Gb Ethernet | 40 Gb/s standards-based Ethernet | Login node VM hosts and filesystem access |
| 1Gb Ethernet | Standard Ethernet | Management network |
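To put the nominal link speeds in perspective, the following Python sketch computes an idealized, lower-bound time to move a dataset across each network. The 100 GB dataset size is a hypothetical example, and the calculation uses only the nominal line rates from the table, ignoring protocol overhead, encoding, and storage throughput, so real transfers will take longer.

```python
# Nominal link rates from the network table, in gigabits per second.
# These are line rates only; real throughput is lower once protocol
# overhead, encoding, and storage speed are taken into account.
LINK_RATES_GBPS = {
    "FDR InfiniBand": 56,
    "40Gb Ethernet": 40,
    "1Gb Ethernet": 1,
}


def ideal_transfer_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Lower-bound time to move size_gigabytes over a link of rate_gbps.

    Converts gigabytes to gigabits (x8) and divides by the nominal line rate.
    """
    if rate_gbps <= 0:
        raise ValueError("rate_gbps must be positive")
    return size_gigabytes * 8 / rate_gbps


if __name__ == "__main__":
    dataset_gb = 100  # hypothetical dataset size, for illustration only
    for name, rate in LINK_RATES_GBPS.items():
        secs = ideal_transfer_seconds(dataset_gb, rate)
        print(f"{name:>15}: {secs:8.1f} s")
```

Under these assumptions, 100 GB takes roughly 14 s over FDR InfiniBand, 20 s over 40Gb Ethernet, and 800 s over 1Gb Ethernet, which is why bulk data and MPI traffic are kept off the management network.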

Filesystems

Each customer on the ACTnowHPC service has their own dedicated storage volumes. Volumes are placed on our high-performance storage servers and connected via both FDR InfiniBand and 40Gb Ethernet.

Login Nodes

Login nodes are the only virtualized component in the ACTnowHPC system. They are not for running jobs; use them to compile code, edit files, submit jobs, and perform similar tasks.

Each ACTnowHPC customer has their own dedicated login node, a virtual machine running on our Pinnacle 2FX3602 hardware. We closely monitor the number of virtual machines on each host to keep login node performance well balanced.

You can find out more about our login nodes in the login node documentation.